Improving my e-mail IP reputation automation script

Introduction

In Improving e-mail IP reputation with automation, I described a Python script that selects a random e-mail from a large list of e-mails, sends it to a target e-mail address, and another script which marks all emails at the destination as read.

Originally my solution was working great. My IP reputation was good, and all my legitimate e-mails were being delivered.

The Problem

Unfortunately, I was running this on a small EC2 server, and I was running out of CPU credits and it was also causing excessive disk I/O due to swap usage.

The culprit was the script that daily loaded up a large parquet file, selected 300 e-mails at random from that file, and wrote them out to a directory.

I had originally designed that method (.parquet.brotli -> plain text files -> read file -> send email -> delete file) to save disk space. It had the unintended consequence of trading that disk space for CPU and memory issues on my mail server.

The Solution

Rearchitecture

A rearchitecture was needed. I didn’t want to go to having 1:1 file:e-mail mappings because text is highly compressible and I would be wasting 1.43GB of space. If I go the other direction and make one big file, I’ll run the risk of loading it into RAM each time and causing the same swap issues. I also contemplated writing more complex code that could read an indexed, compressed file, which contains headers pointing to each file descriptor. That was more involved than what I wanted to do. Instead, I compromised.

I decided to chunk out the e-mails in batches of 1,000 into individually wrapped .json.xz (LZMA-compressed) files. Yes, each file is simply a JSON array of e-mails:

|

|

Funny enough, all 518 batches of e-mails is 229MB, whereas my prior solution using brotli compression was 235MB, resulting in an overall disk space savings of 6MB.

Rewriting

I decided if I was going for better efficiency, that I should write it in Rust. It was one of the first projects I ever wrote in Rust, so go easy on me if you view the code! (Why did I not use clap or anyhow?)

Component 1 - parquet to xz conversion

As explained in my prior blog post, I had converted Enron’s e-mails from CSV to .parquet.brotli. I wrote a converter (code here) that would read the parquet file and convert it to hundreds of .json.xz files in my desired format. To do that, I also had to write a parser that could convert the e-mail (so that I can extract the Subject header into its own field).

See the original structure derived from the public CSV and the new format that I converted it to:

|

|

You can see the rest of my format and how I converted the e-mails here.

Component 2 - e-mail sender

Next, I developed the e-mail sender. This is the component which picks a random file, reads it, deserializes it, picks a random e-mail from that file, and sends it. The main logic for this component lives here.

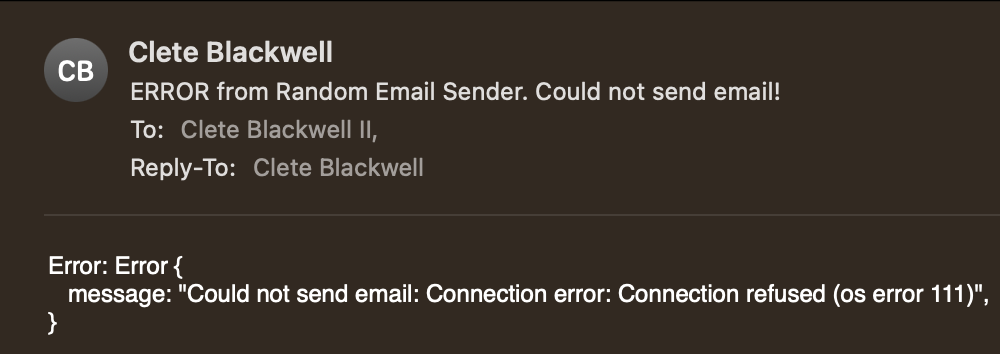

It also has a feature that, if e-mail sending fails, it will send an e-mail to a “failures” address. I use this to notify me if the e-mail hard bounces or the server is unavailable:

In testing, this entire process takes 300-500 milliseconds.

Component 3 - e-mail reader/deleter

The final component logs into the destination e-mail server with IMAP, reads (or deletes, this is configurable) all messages, and exits. This component is implemented here.

Putting it all together

I created a JSON configuration file that contains all the login information needed:

|

|

The passwords can either be loaded in the JSON file as a password key/value in each of the smtp, errorSmtp, or read keys above, or it can be set in smtpPassword, readPassword, and smtpErrorPassword environment variables.

- Download

mailbox_readerandrandom_email_senderfrom my GitHub repo into a folder calledrandom-email-sender - Craft a

config.jsonfile - Download all e-mail

.json.xzfiles (here is a pre-made copy) and place in a subfolder calledemails* - Create cron jobs:

9 * * * * <user> /<path>/random-email-sender/mailbox_reader

3,8,13,18,23,28,33,38,43,48,53,58 * * * * <user> /<path>/random-email-sender/random_email_sender

*: If you don’t trust a .zip file from my website, you can download the original CSV linked in my prior post, run the Python script I provided in the prior post to convert it to .parquet.brotli, and then run my parquet_to_files binary to do the final conversion.

Did I really need this solution?

Probably not. My IP address has had good health for multiple years now, and has been actively whitelisted through Microsoft’s whitelist request process. Still, it makes me feel better knowing that I am actively keeping my delivery health strong.